AI Agents Write Your Code, But Who's Watching the Supply Chain?

Spotify's best engineers haven't written a single line of code since December. Anthropic says 70-90% of its own codebase is AI-generated. Claude Code just crossed $2.5 billion in annualized revenue. If you ship software in 2026, an AI agent almost certainly touched your latest commit.

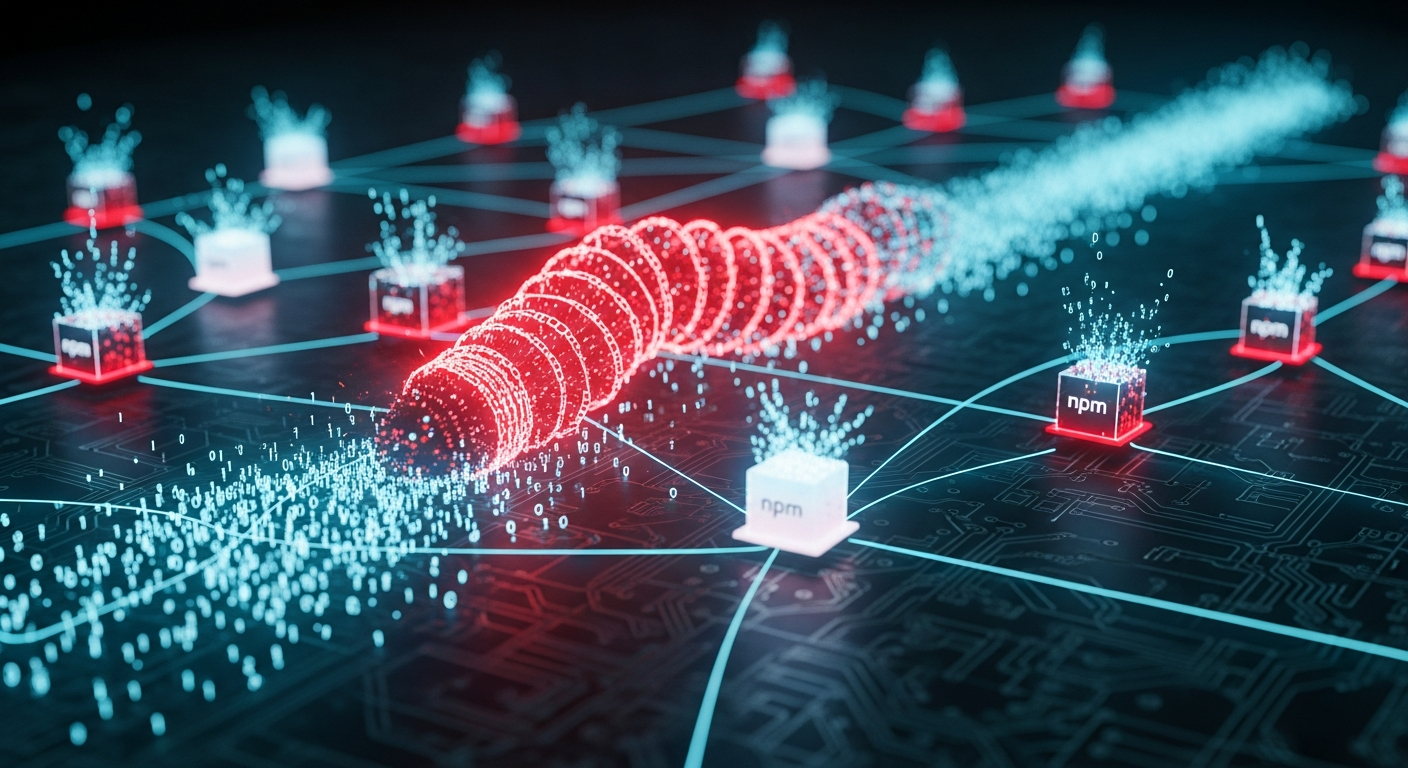

Meanwhile, a self-replicating worm called Shai-Hulud infected 796 npm packages (with over 20 million combined weekly downloads) by hijacking developer credentials and autonomously publishing poisoned versions of legitimate libraries. Last month, attackers targeted users of the n8n automation platform with fake community nodes that stole OAuth tokens.

I keep thinking about where these two trends intersect. We're writing code faster than ever, but the supply chain security around that code hasn't kept up. Not even close.

Speed creates blind spots

The pitch for AI coding agents is speed. Claude Code's agent teams split tasks across parallel instances. GitHub now runs Claude and Codex directly inside Copilot. The experience feels more like directing an engineering squad than writing code yourself.

But when a human writes code, they (usually) read the imports. They recognize unfamiliar package names. They notice when a dependency bumps a major version unexpectedly. When an AI agent scaffolds an entire feature in 90 seconds, those micro-decisions happen at machine speed, with no human reviewing each npm install.

I've caught myself doing exactly this. Ship the feature, glance at the diff, move on. The dependency changes? Those get maybe two seconds of attention.

How Shai-Hulud actually works

Shai-Hulud isn't a typical malicious package. It's the first self-replicating supply chain attack on npm. The chain goes like this:

1. A developer installs a compromised package

2. The worm steals their npm authentication tokens

3. It identifies every other package the developer maintains

4. It injects malicious code into those packages and publishes new versions automatically

5. When another developer installs one of the newly infected packages, steps 2-4 repeat with that developer's tokens
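
To make the fan-out concrete, here's a toy model of that chain. It's a sketch under simplifying assumptions, not the worm's actual logic: every maintainer who installs an infected package is assumed compromised, and a stolen token is treated as publish access to everything that maintainer owns. The maintainer and package names are hypothetical.

```typescript
// Toy model of the self-replicating chain: who installs what, and who
// can publish what. All names in the example below are hypothetical.
interface EcosystemGraph {
  // maintainer -> packages they hold publish rights for
  publishes: Record<string, string[]>;
  // package -> maintainers who install it in their own projects
  installedBy: Record<string, string[]>;
}

// Breadth-first walk from one poisoned package. Installing an infected
// package leaks the installer's token; a leaked token lets the worm
// republish every package that maintainer owns, which then spreads further.
function simulateSpread(graph: EcosystemGraph, firstPackage: string): string[] {
  const infected = new Set<string>([firstPackage]);
  const compromised = new Set<string>();
  const queue: string[] = [firstPackage];

  while (queue.length > 0) {
    const pkg = queue.shift() as string;
    for (const maintainer of graph.installedBy[pkg] ?? []) {
      if (compromised.has(maintainer)) continue;
      compromised.add(maintainer); // token stolen on install
      for (const owned of graph.publishes[maintainer] ?? []) {
        if (!infected.has(owned)) {
          infected.add(owned); // poisoned version published
          queue.push(owned); // now waits for its next victim
        }
      }
    }
  }
  return Array.from(infected).sort();
}

// One install by "alice" ends up reaching a package only "bob" owns.
const demoGraph: EcosystemGraph = {
  publishes: { alice: ["util-a", "util-b"], bob: ["fmt-c"] },
  installedBy: { "bad-pkg": ["alice"], "util-b": ["bob"] },
};
const spread = simulateSpread(demoGraph, "bad-pkg");
// spread is ["bad-pkg", "fmt-c", "util-a", "util-b"]
console.log(spread);
```

In this model, how far the worm gets depends on who can publish what, not on how popular the first package was: one overprivileged token is enough to carry it into packages its first victim never touched.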

The second wave (Shai-Hulud 2.0, November 2025) added something worse: if it can't spread further, it erases your home directory. Not your node_modules. Your entire home directory.

640 packages in the first wave. 796 in the second. The n8n attack showed the same pattern from a different angle: community nodes that look like real integrations but run with full access to credentials, environment variables, and the filesystem. No sandboxing. No isolation.

Why AI agents make this worse

AI agents generate functional code that passes tests. They're terrible at supply chain judgment calls. An agent told to "add Google Ads integration to this n8n workflow" will install n8n-nodes-hfgjf-irtuinvcm-lasdqewriit if it matches the integration description. It won't flag the suspicious package name. It won't check the publisher's history. It won't notice the package was published three hours ago.

That's not a hypothetical. That's the actual attack vector from the n8n campaign.
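
The checks the agent skips are cheap to automate as a review gate. Below is a rough sketch of the three signals named above: a random-looking name, a publisher with no track record, and a very recent first publish. The PackageInfo shape, the 72-hour threshold, and the function names are assumptions for illustration. The public npm registry does expose comparable data (for example, a time.created timestamp in each package's metadata), but fetching it is left out here.

```typescript
// Simplified, hypothetical metadata for a candidate package. Field names
// are illustrative, not the npm registry's actual response format.
interface PackageInfo {
  name: string;
  createdAt: string; // ISO-8601 time of the first publish
  publisherOtherPackages: number; // other packages by the same publisher
}

// Crude gibberish signal: five or more consonants in a row within one
// name segment. Expect false positives (e.g. "https").
function looksRandom(name: string): boolean {
  return name
    .split(/[^a-z0-9]+/i)
    .some((segment) => /[bcdfghjklmnpqrstvwxz]{5,}/i.test(segment));
}

// Returns human-readable reasons to hold the install for review;
// an empty array means none of these heuristics fired.
function reviewReasons(pkg: PackageInfo, now: Date, minAgeHours = 72): string[] {
  const reasons: string[] = [];
  const ageHours = (now.getTime() - Date.parse(pkg.createdAt)) / 3600000;
  if (isNaN(ageHours)) {
    reasons.push("publish date could not be parsed");
  } else if (ageHours < minAgeHours) {
    reasons.push(`first published ${ageHours.toFixed(1)}h ago`);
  }
  if (pkg.publisherOtherPackages === 0) {
    reasons.push("publisher has no other packages");
  }
  if (looksRandom(pkg.name)) {
    reasons.push("name looks randomly generated");
  }
  return reasons;
}

// The name comes from the n8n campaign; the date and count are made up.
const candidate: PackageInfo = {
  name: "n8n-nodes-hfgjf-irtuinvcm-lasdqewriit",
  createdAt: "2026-01-15T09:00:00Z",
  publisherOtherPackages: 0,
};
const reasons = reviewReasons(candidate, new Date("2026-01-15T12:00:00Z"));
// reasons is ["first published 3.0h ago", "publisher has no other packages",
//             "name looks randomly generated"]
console.log(reasons);
```

Every flag here is a reason for a human to look, not proof of malice. The name check alone would trip on perfectly legitimate packages.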

This is the part that unsettles me. I use Claude Code daily. I trust it to generate solid application code. But supply chain decisions require a different kind of judgment, one that involves reputation, timing, and context that models don't have access to yet.

Three defenses worth implementing

1. Lock your dependencies and actually enforce the lock

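Use npm ci instead of npm install in CI/CD. Commit package-lock.json, which records the exact resolved version of every package, and pin exact versions in package.json too instead of ^ or ~ ranges (save-exact=true in .npmrc makes that the default). If you use Renovate or Dependabot, require manual approval for major version bumps. AI agents should never run npm install <package> in production pipelines without a human checkpoint.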
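
npm ci already exits with an error when package.json and package-lock.json disagree, but it won't stop someone (or some agent) from committing a caret range to package.json in the first place. As a minimal sketch of an extra CI gate, assuming package.json has already been parsed into an object, this flags any dependency that isn't pinned to an exact version. The Manifest type and unpinned function are hypothetical, not part of npm.

```typescript
// Minimal slice of a parsed package.json; only dependency maps matter here.
interface Manifest {
  dependencies?: Record<string, string>;
  devDependencies?: Record<string, string>;
}

// Exact versions only: 1.2.3, 1.2.3-beta.1, 1.2.3+build.5.
// Rejects ranges (^, ~, >=, x, *), tags like "latest", and URLs.
const EXACT_VERSION = /^\d+\.\d+\.\d+(?:-[0-9A-Za-z.-]+)?(?:\+[0-9A-Za-z.-]+)?$/;

function unpinned(manifest: Manifest): string[] {
  const problems: string[] = [];
  for (const field of ["dependencies", "devDependencies"] as const) {
    const deps: Record<string, string> = manifest[field] ?? {};
    for (const name of Object.keys(deps)) {
      if (!EXACT_VERSION.test(deps[name])) {
        problems.push(`${field}: ${name}@${deps[name]}`);
      }
    }
  }
  return problems;
}

const exampleManifest: Manifest = {
  dependencies: { express: "4.19.2", lodash: "^4.17.21" },
  devDependencies: { typescript: "~5.4.0" },
};
const problems = unpinned(exampleManifest);
// problems is ["dependencies: lodash@^4.17.21", "devDependencies: typescript@~5.4.0"]
console.log(problems);
```

In CI, a non-empty result would fail the build, and a person decides whether the range was intentional.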

2. Audit dependencies, not just logic

Code review in 2026 can't just ask "does this logic work?" It also needs to ask which new dependencies were added, who published them, and when. Tools like Socket.dev and Endor Labs flag suspicious packages before they enter your lockfile. Add them to your CI pipeline.
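
Answering "what was added, by whom, and when" starts with a list of what actually changed. Here's a minimal sketch that diffs two parsed package-lock.json files and reports new packages and version changes, so a reviewer knows which publishers and publish dates to check. It assumes lockfileVersion 2 or 3, where installed packages are keyed by path under a top-level packages object; the function names are hypothetical, and it complements rather than replaces a scanner like Socket.dev or Endor Labs.

```typescript
// Minimal slice of package-lock.json (lockfileVersion 2 or 3): `packages`
// maps install paths such as "node_modules/foo" to package metadata.
interface Lockfile {
  packages: Record<string, { version?: string }>;
}

// "node_modules/a/node_modules/@scope/b" -> "@scope/b"
function nameFromPath(path: string): string {
  const marker = "node_modules/";
  const idx = path.lastIndexOf(marker);
  return idx === -1 ? path : path.slice(idx + marker.length);
}

// Lists packages that are new, or whose version changed, between two
// lockfiles. Removals are ignored because they bring in no new code.
function dependencyChanges(before: Lockfile, after: Lockfile): string[] {
  const changes: string[] = [];
  for (const path of Object.keys(after.packages)) {
    if (path === "") continue; // the root project itself
    const next = after.packages[path].version ?? "unknown";
    const prev = before.packages[path];
    if (prev === undefined) {
      changes.push(`added ${nameFromPath(path)}@${next}`);
    } else if ((prev.version ?? "unknown") !== next) {
      changes.push(`changed ${nameFromPath(path)}: ${prev.version ?? "unknown"} -> ${next}`);
    }
  }
  return changes.sort();
}

const beforeLock: Lockfile = {
  packages: { "": {}, "node_modules/express": { version: "4.19.2" } },
};
const afterLock: Lockfile = {
  packages: {
    "": {},
    "node_modules/express": { version: "4.21.0" },
    "node_modules/some-new-dep": { version: "0.0.1" },
  },
};
const changes = dependencyChanges(beforeLock, afterLock);
// changes is ["added some-new-dep@0.0.1", "changed express: 4.19.2 -> 4.21.0"]
console.log(changes);
```

Transitive additions show up too, which matters because a single npm install can pull in packages nobody named explicitly.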

3. Scope your agent's permissions

Don't give Claude Code or any agentic tool your npm publish token. Don't let it access credentials it doesn't need. Treat AI agents like any other automated system: principle of least privilege. The n8n attack worked specifically because community nodes had the same access level as the n8n runtime itself.
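
One concrete place least privilege breaks down is the environment: child processes inherit it by default, so an NPM_TOKEN or cloud key exported in your shell is visible to any agent launched from it. A minimal sketch of the alternative is to build the agent's environment from an explicit allowlist. The allowlist contents and the scopedEnv name are assumptions to adapt, not a setting of Claude Code or any other tool.

```typescript
// Explicit allowlist of variables an agent process may see. Everything
// else (NPM_TOKEN, cloud keys, CI secrets) is dropped. Adjust per tool.
const AGENT_ENV_ALLOWLIST: readonly string[] = ["PATH", "HOME", "LANG", "TERM"];

function scopedEnv(
  parent: Record<string, string | undefined>,
  allow: readonly string[] = AGENT_ENV_ALLOWLIST,
): Record<string, string> {
  const env: Record<string, string> = {};
  for (const key of allow) {
    const value = parent[key];
    if (value !== undefined) env[key] = value;
  }
  return env;
}

// Secrets present in the parent environment never reach the child.
const agentEnv = scopedEnv({
  PATH: "/usr/bin",
  HOME: "/home/dev",
  NPM_TOKEN: "npm_example",
  AWS_SECRET_ACCESS_KEY: "example",
});
// Object.keys(agentEnv) is ["PATH", "HOME"]
console.log(Object.keys(agentEnv));
```

In Node, the result can be passed as the env option of child_process.spawn, which then replaces the inherited environment rather than adding to it. This doesn't cover tokens stored on disk: npm keeps auth tokens in .npmrc (often in the home directory), so that file also needs to stay out of the agent's reach.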

What I'm taking away from this

- AI-generated code is moving faster than supply chain security can adapt

- Shai-Hulud proved that npm's trust model (publish access = permanent access) breaks down when autonomous agents enter the picture

- The n8n attack showed that "community ecosystem" features without sandboxing are open doors for credential theft

- Review dependency changes with the same care you give logic changes

- Treat AI agents as untrusted automation: restrict their permissions and never give them publish credentials

The agents write solid code. But the dependency tree they build? That's still on you.

References: